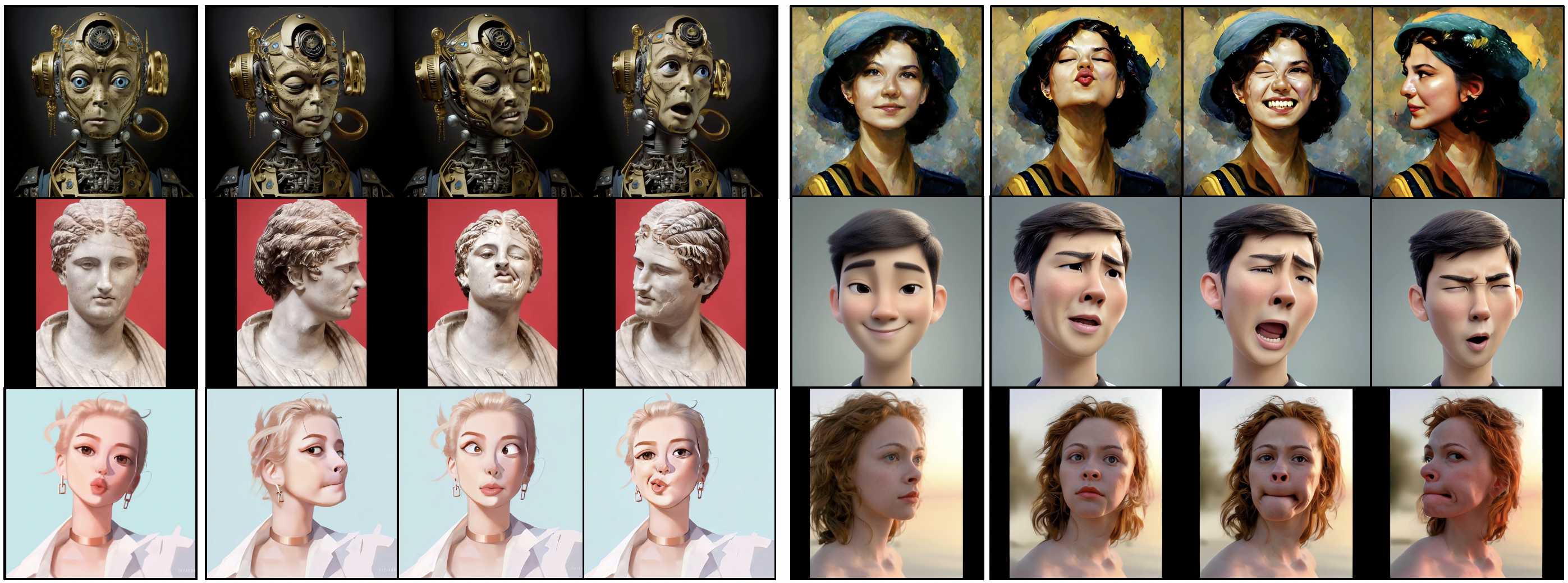

X-Portrait: Expressive Portrait Animation with Hierarchical Motion Attention

SIGGRAPH 2024

ByteDance

Please turn on speaker.

Source portraits: www.pexels.com, www.midjourney.com, www.deviantart.com

Driving video: www.reddit.com

Please turn on speaker.

Source portraits: www.pexels.com, www.midjourney.com, www.deviantart.com

Driving video: www.reddit.com

Please turn on speaker.

Source portraits: www.pexels.com, www.midjourney.com, www.deviantart.com

Driving video: www.reddit.com

Please turn on speaker.

Source portraits: www.pexels.com, www.midjourney.com, www.deviantart.com

Driving video: www.reddit.com

Please turn on speaker.

Source portraits: www.pexels.com, www.midjourney.com, www.deviantart.com

Driving video: www.reddit.com

Please turn on speaker.

Source portraits: www.pexels.com, www.midjourney.com, www.deviantart.com

Driving video: www.reddit.com

Please turn on speaker.

Source portraits: www.pexels.com, www.midjourney.com, www.deviantart.com

Driving video: www.reddit.com